Knowing what we need to know, when we need to know it

Diana Beveridge is responsible for the delivery and continuous improvement of the Scottish Improvement Leaders (ScIL) Programme, developing individuals to lead and support large-scale improvement initiatives across Scotland’s public services. She is also an Improvement Advisor within Scottish Government, where she provides support to areas of work with strategic priority, including the Permanence and Care Excellence (PACE) programme to improve outcomes for looked after children.

It’s true - you can’t fatten a cow by weighing it. Although we talk a lot about measurement and the power of data in our work to improve the permanence process for children, it's not the holy grail. Not on its own anyway.

Don’t let anyone tell you any different - people and relationships are at the heart of improvement. But we also need to understand how the system woven around looked after children works: its strengths, vulnerabilities and weaknesses.

We need the ability to learn what makes that system, its processes and relationships, better. And understand what has no impact and what may even make things worse.

Yet, I wonder if you have ever implemented an idea, policy or guideline and spent months sticking with it while suspecting it wasn’t making a blind bit of difference, or perhaps you were unsure as to whether it was, in fact, the best approach...? I don’t think you’d be alone.

And that’s where data for improvement comes in

Data for improvement serves a particular purpose. A purpose that is different to that of data for research – we often need research data to understand what we need to do differently and why. For instance, research tells us that it’s damaging for children to spend a long, broken-up journey on the route to permanence. We don’t need to prove that again.

It’s also different to accountability data, where the data is used to judge and provide assurance. We’re all accountable to someone... our boss, our boss's boss, the people of Scotland, the children we are doing this for. And is it unreasonable that they’d be interested in how long it takes children to get through the system and whether that is good enough? So accountability data can often act as the ignition for change.

Data for improvement serves a different purpose, though. It tells us what we need to know about how things are working, when we need to know it, so that we can act.

And with that different purpose comes a different approach

Imagine a child learning to swim. She needs to know quickly if kicking her legs helps her to float or causes her to sink. There’s no time available to do a lengthy study on it, and I doubt providing a performance report to her mum detailing how far she’s travelled across the shallow end will have much of an impact!

She needs real-time data, right at the point when that learning is going to mean something and she can adjust things for the better.

It’s when we get that dynamic data, focused on the important things we need to influence in our system, and gathered over time to support learning, that we are measuring for improvement.

This data doesn’t have to be perfect - just good enough. Hold on to that thought, as I know from working across a number of Permanence and Care Excellence (PACE) areas that gathering data can be an area of trepidation for many.

Aberdeenshire's journey

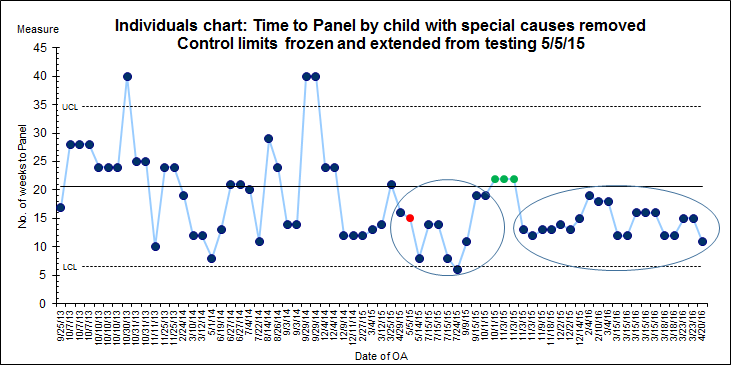

And that’s why I’m sharing a chart from Aberdeenshire, one of our early PACE areas.

This area went from having no data and struggling to know where to start to being able to track each child in the system. Each data point on the chart is one of their children.

They can show exactly how long it has taken from a child’s plan to a decision being made at panel. And importantly, they have been able to tell whether the changes they were trying helped speed up the process, without causing things to go belly-up elsewhere in the system!

The approach hasn’t involved any sophisticated IT solution - it’s based on a simple monthly return providing information on changes they are trying, and details on how children have travelled through the system. They put this into a spreadsheet.
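For anyone wondering what that spreadsheet calculation might look like, here is a minimal sketch in Python. The field names, the three rows and the dates are all made up for illustration and are not Aberdeenshire's data. Using the median as a centre line is a common run chart convention, assumed here rather than taken from the Aberdeenshire work.

```python
from datetime import date
from statistics import median

# Hypothetical rows standing in for a monthly return: one row per child.
# Field names and dates are invented for this example.
records = [
    {"child": "A", "plan_date": date(2015, 1, 1), "panel_decision_date": date(2015, 4, 11)},
    {"child": "B", "plan_date": date(2015, 2, 1), "panel_decision_date": date(2015, 4, 22)},
    {"child": "C", "plan_date": date(2015, 3, 1), "panel_decision_date": date(2015, 4, 30)},
]


def days_to_decision(record):
    """Days from the child's plan to the decision being made at panel."""
    return (record["panel_decision_date"] - record["plan_date"]).days


# One data point per child, in the order the children reached panel.
durations = [days_to_decision(r) for r in records]

# The median gives a simple centre line to compare new data points against.
centre_line = median(durations)

for record, days in zip(records, durations):
    print(f"Child {record['child']}: {days} days")
print(f"Median: {centre_line} days")
```

Plotting one point per child in time order, with the median drawn across, is enough to start seeing whether a change is followed by shorter waits.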

Of course, there has been upskilling involved in helping local teams develop meaningful measures and develop the skills to be able to chart and analyse these – but why wouldn’t we invest in this?

I’d imagine even the least data-experienced amongst us would look at this and conclude that whatever Aberdeenshire is doing, it’s working and they should keep doing it.

In fact, they have data that sits beneath this process step measure that tells them what changes have worked and when. It includes simple qualitative data like social worker feedback on process changes. It’s helping them stop doing what doesn’t help the child, and to do more of what does.

And they are not pulling it once a year for their CLAS return but looking at it as children progress through the system, to learn and take action when it is most needed. Just like our little girl in the pool – getting the right information at the right moment to help a child.

Tracking children isn't enough

As well as tracking children to provide data for learning, knowing where our children are against milestones has emerged as a key part of what’s needed to improve the permanence journey.

Much like the cow who won’t fatten by measurement alone, we won’t make the journey better for children just by tracking them.

But, given the complexity of the system that surrounds children as they move towards permanence, I wonder if it might feel to them like being in a forest, not quite knowing when they will come out, what path they will take, or where they will stop off along the way. So shouldn’t we at least know exactly where every child is and how long they’ve been there?

I know in some areas a lot of data exists, but I wonder if it’s telling you what you need to know, when you need to know it? And I'll leave you with this thought from Mary Dixon-Woods (Jan 2014):

If you’re not measuring, you’re not managing

If you’re measuring stupidly, you’re not managing

If you’re only measuring, you’re not managing

Diana Beveridge

Twitter: @DianaBeveridge

The views expressed in this blog post are those of the author/s and may not represent the views or opinions of CELCIS or our funders.
